I gave the keynote address to this year's Open University Computing & Communications Associate Lecturers' conference on Friday last:

Who will control the AI computerised machines: A whistlestop tour through our digital dystopia.

A lightly edited transcript of my remarks follows.

Several months ago Michael Bowkis approached me and asked if I’d be willing to do the opening keynote for the C&C tutor conference this year.

I said, you know Michael, I’ve been thinking about a universal theory of accountability, transparency, fairness and respect for human rights in automated systems and communications infrastructure.

Michael, being the very practical man that he is, gently pointed out that it would be nice to have some people actually show up for the event.

So, in deference to his wisdom, welcome to this evening’s talk: Who will control the computerised machines: a whistlestop tour through our digital dystopia.

Politicians?

Should we vest control of these machines in the hands of our elected leaders? Given the UK’s recent experiments on that front perhaps we should move swiftly on.

Ai-Da

But before we do, there was an enlightening exercise in how politicians allow themselves to get caught up in AI hype when this robot was rolled in front of a House of Lords committee, supposedly to give evidence last month. The creator had to be provided with the questions in advance to prepare answers. The robot could then deliver the pre-prepared answers when the members of the committee asked the question clearly enough for the device’s speech recognition software to identify which of the questions was being asked.

The robot gave up and shut down part way through the session and the inventor put sunglasses on it when rebooting to hide the “quite interesting faces” it makes on start up. If politicians are going to get involved in the serious business of controlling computing machines, they should not be participating in PR stunts taking evidence from robots. They need to be hearing from roboticists, computer scientists, engineers, ethicists, lawyers, designers, security specialists, multidisciplinary experts, to help them understand what computers can and more importantly cannot do.

Part of the reason I amended the original title of this talk from who will control the AI machines to who will control the computerised machines is I’m tired of all the hype surrounding AI, which makes it harder for people to sort the snake oil sales in this space from the substantive progress. There's so much AI and so called ‘smart’ technology hype and there are zero consequences for selling vapourware or making wild predictions that turn out to be wrong. From thermostats to cities, dolls to motorways, tens of billions of things and systems are being connected to the internet and labelled “smart” or imbued with so called AI. Often it is unnecessary, compromising, invasive, dangerous, inappropriate or creepy.

Not "smart" but...

Next time someone lazily, ignorantly, cynically, exploitatively or in a techno utopian sales pitch, including breathless promises of how the technology will come to know us and respond to our needs, uses the term “smart” in connection with technology, try substituting words like these instead.

And ask them some pointed questions like:

What problem are you trying to solve?

How well does your supposed tech “solution” work?

What other problems does it cause?

How much does it cost – not just in economic terms but in social, political or environmental costs?

Is it worth it?

Too often, by the time you get to question 5, you’ll find the answer is no.

Privacy invading toothbrush

This is a privacy invading toothbrush and a phone you can install its privacy invading app on. Typically privacy invading toothbrushes use sensors to assess how well you are brushing your teeth, sending that data, wirelessly, to some third party who may send it to other third parties. In the US it may include the provider of your health insurance. People whose brushing aligns most closely with what the manufacturer’s or insurance companies’ algorithms consider best practice may be entitled to cheaper insurance. Brushers the algorithms deem more careless get penalised. People who can’t afford privacy invading toothbrushes get penalised even more. This btw applies to all the gadgets, apps and systems slurping up health data and the digital ecosystems funnelling that data to health insurers and other equivalent economic actors.

But we need not worry about this in the UK, right? We have the NHS. Free at the point of use. Relatively. If we can wait long enough for access to intensely dedicated but increasingly exhausted medical staff.

Peter Thiel

Meet Peter Thiel. Mr Thiel is the billionaire co-founder of PayPal and the data analytics company Palantir, and the first external investor in Facebook.

When Covid hit, out of the goodness of his heart, Mr Thiel offered Palantir’s services to the NHS for the grand sum of £1, an offer enthusiastically accepted by then health secretary, Hancock of the jungle. The contract was not subject to competitive tender and quickly morphed into a £1 million contract; since then media reports suggest Palantir have secured £37 million in NHS contracts.

They are currently bidding for and are hot favourites to get a 5 year, initially £350 million contract, essentially to become the information nervous system of the NHS – the core operating system for managing all patient data in England. The contract could ultimately be worth more than £1 billion over ten years.

In 2019 Ernst & Young calculated that NHS data could be worth about £10 billion per year.

Palantir’s main clients are the CIA (also an early major investor in the company), the US military, the FBI, the NSA, US police forces and the brutal US Immigration & Customs Enforcement agency (ICE). So the company doesn’t appear to be an immediately obvious fit with the NHS.

If we pull on the thread of data management in the NHS it leads to a whole host of horror stories but that is not the object of this evening’s exercise. If you are interested in that subject, however, I’d recommend starting with a brilliant, small charitable organisation, called medConfidential, as well as everything the security engineering group at Cambridge University, led by Professor Ross Anderson, has researched and written on the subject.

So should Mr Thiel and Palantir control the computing machines that control patient data in the NHS? Quite a few campaigning groups think not…

The invisible hand of the market?

Even if a group of civil rights organisations don’t trust Palantir to take control of the NHS, perhaps the market should be the ultimate authority on who controls the computing machines. I don’t mean the Grand Bazaar in Istanbul but Adam Smith’s invisible hand of the market that Western society puts so much faith in. Supply and demand, serviced by commercial organisations.

Well, the market has supplied us with the internet of things, tens of billions of physical devices with little or no security that are now connected to the internet, primarily as a promotional feature but also for surveillance capitalism…

My friend Cayla

This is My friend Cayla – not my friend you understand but that was the label on the packaging it was sold in – a talking doll produced by Genesis Toys. It used rudimentary speech recognition and a mobile app to search the internet for answers to use to have a conversation with the child. It was awarded the Innovative Toy of the Year in 2014 and it was in the top ten toys sold in Europe that year. In February 2017 the German Federal Network Agency banned the doll as an illegal spying device, under section 90 of the German Telecommunications Act. Section 90 [Misuse of Transmitting Equipment] prohibits devices with surreptitious recording & transmission capabilities, passing themselves off as something innocuous.

The Norwegian Consumer Council criticised the doll for enabling the collection of children’s conversations – these were secretly transmitted to US company Nuance – for marketing and other commercial purposes, and the sharing of data with other third parties. The dolls were also programmed to do sales pitches for other goods and services, such as films from Disney, with whom the producer of the dolls had commercial ties.

Lexus remote services

On the 31st of October a bunch of remote services for certain Lexus cars were discontinued – including Automatic Collision Notification, Enhanced Roadside Assistance, Stolen Vehicle Locator, Remote Door Lock/Unlock, Vehicle Finder, and Maintenance Alerts. The features were provided on a subscription basis and Lexus generously offered to refund subscriptions for time periods that ran beyond the end of October 2022. According to Lexus, however, the only way to fix the issue and get access to these remote services again was to buy a new car.

Ok so the market is not really looking all that promising as a reliable source of control for the computing machines. But what about the big tech companies? They are the experts after all. They understand the technology better than anyone.

Let’s face it, GAFAM and data cartels like Experian and the ad tech industry and other commercial actors have one primary motive – profit – they want to sell us stuff or monetise profiling and categorising of people, to influence our behaviour and sell us stuff.

Aaand there’s gold in them there data collection hills.

Aaaand we make it easy. We even hand over truckloads of cash for their gadgets and services and install them in our homes and lives to enable them to spy on us.

We have sleepwalked into a surveillance society, like the well-trained, compliant consumers we are, trading our privacy for the convenience, attraction, addiction, access to Silicon Valley’s elixir of digital goods and services.

There’s always Apple, right? Tim Cook has made massive waves about the importance of privacy. In a speech in January last year... let me see, where is the quote... he said “If we accept as normal and unavoidable that everything in our lives can be aggregated and sold, then we lose so much more than data. We lose the freedom to be human.”

He has repeatedly criticised other big tech companies like Facebook and even left Mark Zuckerberg allegedly stunned by the suggestion, after Zuck sought his advice in the wake of the Cambridge Analytica scandal, that Facebook should delete all data they collect from outside FB, Instagram and WhatsApp.

Facebook btw tracks users wherever they go on the Net whether they agree to it or not. As far as I know, the OU still has Facebook tracking pixels on our VLE, though I have not checked that in recent months. That’ll be your homework for the weekend. Let me know what you find.
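
For anyone taking up that homework, here is a minimal Python sketch of one way to check a saved copy of a page's source for Facebook (Meta) Pixel markers. It assumes you already have the page source as a string, and it only looks for two well-known signatures: the `connect.facebook.net` script host and the `facebook.com/tr` image endpoint. The function name and the sample HTML are made up for illustration, and a clean result does not prove a page is tracker free, since tags can also be loaded indirectly, for example through a tag manager.

```python
import re

# Two widely documented signatures of the Facebook (Meta) Pixel.
# Assumption: this short list is illustrative, not exhaustive.
PIXEL_PATTERNS = {
    "pixel script host": re.compile(r"connect\.facebook\.net", re.IGNORECASE),
    "pixel image endpoint": re.compile(r"facebook\.com/tr\b", re.IGNORECASE),
}


def find_pixel_markers(html: str) -> list[str]:
    """Return the names of the pixel signatures found in the page source."""
    return [name for name, pattern in PIXEL_PATTERNS.items() if pattern.search(html)]


# Hypothetical page source, invented for this example.
sample_html = """
<html><head>
<script src="https://connect.facebook.net/en_US/fbevents.js"></script>
</head><body>
<noscript><img src="https://www.facebook.com/tr?id=000&ev=PageView&noscript=1"/></noscript>
</body></html>
"""

if __name__ == "__main__":
    # Print whichever signatures appear in the sample page.
    print(find_pixel_markers(sample_html))
```

Your browser's developer tools, with the network tab open while the page loads, will give a fuller picture than any static scan of the source.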

So Apple as the saviour then?

Nope.

Just last week, a couple of researchers discovered that “Apple collects extremely detailed information on users with its own apps even when they turn off tracking, an apparent direct contradiction of Apple’s own description of how the privacy protection works.”

On the plus side Mr Cook is technically within the bounds of his advice to Mr Zuckerberg, keeping his spying within Apple's walled garden. But Apple are still, sadly, well ahead of the other big four. For example, in 2020 they made it easy to opt out of third party surveillance on iPhones which 96% of users duly did. That move reportedly cost Facebook alone $10 billion and put a dent in Tim and Mark’s friendship. But last week’s evidence of how Apple fail on privacy is not unique.

Just shows what a low base we’re prepared to accept…

What about the data brokers and the adtech industry? Well you know, that’s just too dark a rabbit warren to even take a peek at this evening but if you are interested in that, one of the most engaging commentators on the matter and on the massive GDPR breach that is real time bidding for webpage ads, is Johnny Ryan of the Irish Council for Civil Liberties. [At this point I proved incapable of saying "civil liberties" without tripping over my words so have edited that bit]

Amazon Ring

Before we move on from the big five, Amazon should get an honourable (or, depending on your point of view, dishonourable) mention.

First Amazon sold Echo & Alexa devices for people to enable Amazon to spy on them. They shift huge volumes of these things. I’m sure a lot of you listening have one or more of them in your homes and other corners of your lives. Amazingly enough, the ever-growing installed base of these things also led to a measurable increase in the purchase frequency and overall spending of those people at... you'll never guess where... Amazon.

Then Amazon got into direct sales of their proprietary surveillance system, Amazon Ring. It’s good business for Amazon to have people spy on their neighbours and they have put an enormous amount of resources into promoting and selling this system aggressively. The ads stoke up fear and claim Amazon are not watching families but watching over them.

They also entered partnerships with police departments. Those police departments essentially became sales forces for the company, encouraging communities to buy the technology in exchange for promises of more responsive protection from the police. And the quid pro quo for the police was Amazon giving them free access to surveillance footage, often handing it over without the owners’ permission. Amazon also supply police forces with email details, so they can email Ring users directly and ask them to share video footage. Now, according to the EFF, roughly half of the law enforcement agencies that partner with Amazon have been responsible for at least one fatal encounter in the five years up to 2020.

In July 2022, 48 rights-based NGOs asked the Federal Trade Commission in the US to ban Amazon Ring and equivalent privatised surveillance systems.

For those of you in the UK who have invested in Amazon Ring, do remember you have data controller responsibilities under the UK GDPR, even though you do not necessarily have direct control over all the data that your Ring system is collecting.

So I would venture to propose, at this point, that Jeff Bezos’ Amazon is not amongst the top level controllers we need for our computerised machines. And if we are to heed the warnings of those 48 NGOs maybe we should be looking at wresting some of the control he already has away from him and his big tech peers?

It's only data...

Buuuut it’s only data. Why worry?

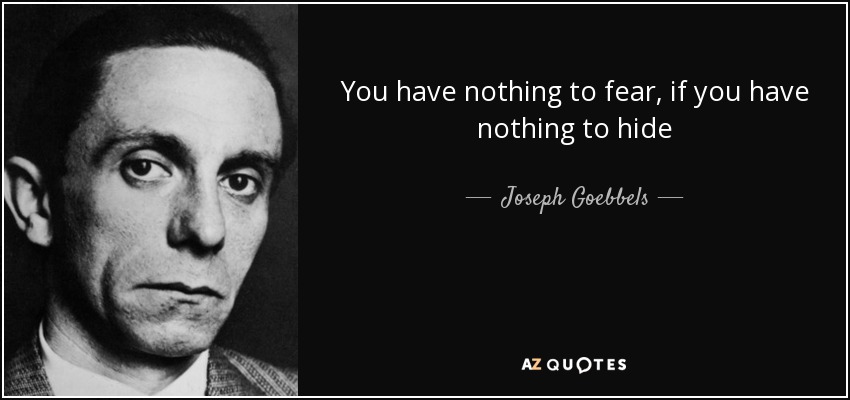

If we have nothing to hide, we have nothing to fear right?

Let’s just obliterate that nasty, insidious, dangerous but powerful little soundbite before going any further.

The nothing to hide… claim is based on 2 enormous false assumptions:

Firstly, that privacy is only about bad people hiding bad things.

And secondly that eliminating privacy will solve a whole host of complex problems such as terrorism.

That first assumption is just plain stupid.

As for the second, if, after a quarter of a century of ubiquitous, digital technology enabled, mass surveillance, you still think problems like terrorism or organised crime can be solved by decimating privacy, I wonder if I could interest you in the purchase of a couple of bridges, one in London, one in Brooklyn?

Never, ever again let someone get away with framing a discussion about privacy with the vicious and deceitful nothing to hide soundbite.

And remember Cardinal Richelieu’s warning that he could find enough evidence for a death sentence in a mere six lines written by the most honest man.

Robot vacuum cleaners are secretly mapping people’s homes, maps the manufacturers sell to other third parties. The very popular Strava fitness app that posts maps of its users’ activity revealed the locations of secret military bases and the habits of service personnel.

Anomaly Six is a private intelligence company that includes the military amongst its customers. Anomaly Six claims to have access to commercial GPS location data from 3 billion mobile devices mapped to 2 billion email addresses. Anomaly Six have also offered superficially credible evidence that amongst the people it has been spying on are staff at the NSA, the US military and CIA. I'm sure that made them popular in those corridors.

On the government side, US law enforcement and intelligence agencies are buying commercially available databases containing location data, to bypass a 2018 US Supreme Court ruling [Carpenter v US, 22 June 2018] that declared the government needs a warrant to get mobile companies to turn over that data.

The Muslim Pro prayer and Qur’an app sent user location data to a data broker called X-Mode, a company which in turn sold that data to US military & intelligence contractors. Many more apps contained X-Mode’s tracking software, apps dealing in dating, travel, weather guides, compasses, games, health & fitness, religious, QR readers, shopping, messaging and more. Wolfie Christl, a researcher at Cracked Labs, found 199 apps so infected. X-Mode, having been banned by Apple and Google app stores when this news came out, was bought by a bigger company Digital Envoy and is now known as Outlogic. Digital Envoy promised not to funnel mobile location data to defence contractors.

Cybersecurity company, Avast, reported that detections of stalkerware increased by 83% during the first covid lockdown of 2020. Location tracking can be a matter of life and death for domestic abuse victims.

More tracking

Stardust was the number 1 period tracking app in the Apple app store in June 2022. Why is that important? Well June 2022 was when the US Supreme Court decided, in the Dobbs v Jackson case, that the US constitution does not confer the right to have an abortion. Stardust’s terms and conditions state that the company will share user data... with law enforcement... without a warrant. Data marketplaces are selling data of users of some of the most popular period tracking apps such as Clue.

Police and criminal justice authorities are increasingly using AI and automated decision making systems.

Police forces in the UK have been found by the courts to have been deploying face recognition systems unlawfully on the streets. The Court of Appeal said there were “fundamental deficiencies” in the legal framework covering this technology. South Wales police leadership declare themselves proud there has never been an unlawful arrest as a result of using the technology in South Wales. But conservative estimates suggest they may have collected and are retaining the biometric facial data of over half a million people without their consent. And there were numerous detentions, if not arrests.

AI is being used to “predict” criminal behaviour. As the Fair Trials group point out... where's that quote... let me dig this out... “these predictions, profiles, and risk assessments can influence, inform, or result in policing and criminal justice outcomes, including constant surveillance, stop and search, fines, questioning, arrest, detention, prosecution, sentencing, and probation. They can also lead to non-criminal justice punishments, such as the denial of welfare or other essential services, and even the removal of children from their families.” And there have been some genuinely horrifying cases arising from the use of this technology.

Earlier this year Fair Trials, European Digital Rights (EDRi) and 43 other civil society organisations called on the EU to ban predictive policing systems.

Dangerous pseudo sciences equivalent to eugenics and phrenology are making a comeback, hidden behind the veneer of the supposedly neutral, scientific rationality of AI and other algorithmic decision making systems. No kidding – researchers at Harrisburg University in the US claim to have developed software that can predict criminality based on a facial image. And police are using DNA to generate images of suspects they have never seen.

State institutions are hiding behind algorithms to discriminate. The passport application website uses an automated check to detect photos which do not meet Home Office rules. Women with dark skin are more than twice as likely to have their passports rejected compared to white men. Photos of women with the darkest skin were four times more likely to be rejected than women with the lightest skin.

Before we even get to the programmers' skills or values or the rules/specifications their bosses or clients set for producing the algorithms, we need to remember data sets used for training AI have inherent biases. Often they will hardwire state institutions’ historical structural discrimination into the automated decision making system. AI reproduces and reinforces discrimination.

Then once the AI has chomped away at the data and churned out its decision there remains bias in the way people accept or reject results of that AI, if there is a legitimately/credibly empowered human element in the decision making process at all.

AI offers a form of statistical optimisation as a solution to social problems and the state’s management of state institutions and services. Yet in practice it can end up intensifying existing bureaucratic structural and cultural and racial inequity and prejudice. AI and other such automated systems are political technologies, the uses of which are completely enmeshed in and dependent on the social contexts in which they are deployed.

As currently deployed in the criminal justice, social welfare and immigration systems in particular these systems are causing overwhelming harm to already marginalised groups. AI systems are pervasive and pose countless ethical and social justice questions we are simply not dealing with.

There’s a whole minefield of a story to tell about the EU’s plans for a universal biometric border control system... and you should look up a case called iBorderCtrl... which is itself a minefield but I’ll just offer you Senior Research Associate at Oxford’s Institute for Ethics in AI, Elizabeth Renieris’ take for this evening:

“The process around the EES raises a host of important questions about the European Union’s approach to technology governance. Specifically, what becomes of fundamental rights when law makers can choose to override or sidestep them with such ease? What does it mean when corporate values displace democratic ones vis-à-vis digital technologies? What does it mean to protect and secure data more than we protect and safeguard the people who are most directly impacted by technologies harvesting that data? And what happens when the fundamental rights of one group are privileged over those of another?”

Up to now I’ve been labouring on data issues. Yet it’s not just about data anymore but about what the algorithms chewing on the data are telling the tens of billions of physical devices and systems now connected to the internet to do. Cyber physical IoT gadgets and systems taking actions in real meatspace.

Sure we can laugh at wine bottles that won’t pour if they lose their charge, smart cups that tell you what you’re drinking as long as what you are drinking is water or Amazon re-order buttons. Good luck if you find yourself in this situation – maybe Amazon can fly one of their drones through your bathroom window to the rescue.

But unsecured IoT devices can threaten the network itself – the Mirai botnet took down a chunk of the internet backbone in October 2016, in a denial of service attack on DNS service provider Dyn, resulting in outages at Twitter, Netflix, Airbnb and hundreds of other sites.

Short blackouts of popular websites are one thing. What about pacemakers? Medical implant manufacturers historically never thought too much about security. Now hackers can interfere, untraceably, with communications to and from a pacemaker, switch it off, make it malfunction, drain the battery. Manufacturers, medics, patients and legislators need to prioritise security as an ongoing process. When you integrate software into a device you make it hackable. When you then connect that device to the internet you expose it to remote security threats and increase the attack surface significantly.

In 2017 the US Food and Drug Administration recalled nearly half a million pacemakers over security issues. And there has been a steady stream of recalls ever since.

Self-driving cars?

Self driving cars? A picture paints a thousand words. The driver of this car was killed, reportedly when using the autopilot.

Click here to harm or kill...

The security implications of the industrial control systems we have now connected to the internet are fathomless.

The Stuxnet attack on the Iranian nuclear program did not succeed because the system was connected to the Net – it was air gapped but nevertheless networked internally. Israeli and US intelligence services recruited an insider to install the Stuxnet worm and infect the SCADA systems, targeting the plant’s Siemens programmable logic controllers, leading to damage to the uranium enrichment centrifuges. SCADA (supervisory control and data acquisition) is basically a piece of kit that controls the PLCs, sensors and actuators that monitor and run industrial equipment like pumps, motors, generators and valves.

In December of 2015, Russian hackers infiltrated three Ukrainian power companies with spear phishing emails, enabling them to take control of the organisations’ SCADA systems with the BlackEnergy malware. They shut down electricity substations and disabled or destroyed various critical systems & components, such as uninterruptible power supplies and commutator electrical switches, leading to regional blackouts.

In December the following year, the same Russian hacking group, Sandworm, attacked the Ukrainian power grid again with an even more sophisticated piece of malware, Industroyer, which automated some of the damage that, with BlackEnergy, had required direct keyboard inputs from the hackers. Industroyer, also called CrashOverride, was specifically designed to target electrical grids and caused blackouts in Ukraine’s capital, Kyiv. I’d recommend Andy Greenberg’s book on Sandworm if you’d like to know more about these attacks and related stories.

Water plant hacks

There have been a series of remote cyber attacks attempting to poison or compromise US water supplies, two last year in California and Florida, the year before that in Kansas, plus a string of ransomware attacks on water companies. Critical infrastructure utility companies are considered lucrative targets by ransomware criminals because they cannot afford to be out of operation for long periods.

Triton attack on Saudi petrochemical plant

Triton was a malware attack on a giant Saudi Arabian petrochemical plant in 2017. The malware, which compromised the plant’s safety control systems, specifically Triconex safety controllers, was discovered by incident response teams after the attackers, seemingly accidentally, shut down the plant on a quiet Friday night.

The attackers, having compromised the company’s computer systems back in 2014, had spent 3 years building up the capability to inflict a lethal disaster, remotely, through the release and/or ignition of vast quantities of toxic and explosive chemicals, like hydrogen sulphide.

Triton has been called the world’s most murderous malware.

Triton and the people behind it have not gone away and are continuing to deploy newer versions of the malware to manipulate and compromise industrial control and safety systems globally.

Then you have actual killing machines… NO not Arnie…

I’m just going to run part of a short video at this point. {Ran about 2m 40s of video}

Ok that's enough of that. The slaughterbots in the film are fictional but, given the nature of the arms industry, we can be confident, on the balance of probabilities, that weapons like this are in development. As the Berkeley computer science professor who produced the video, Stuart Russell, says, it shows the results of integrating and miniaturising existing technology. The potential of AI to benefit society is huge but creating autonomous killer machines, as we are doing – giving machines the power to choose to kill – will be devastating to our security and freedom. Thousands of researchers agree but the window to act to prevent the kind of future you saw in that video is closing.

Now the UK government produced a policy paper on defence in the summer of this year that basically said that human oversight would be an unnecessary restraint on AI. The precise quote from the paper says that real time human supervision of such systems “may act as an unnecessary and inappropriate constraint on operational performance”. Hard to believe really but the defence minister, Baroness Goldie, defended this position in the House of Lords in August.

The US, Russia, India, Australia all agree. Brazil, South Africa, New Zealand, Switzerland, France & Germany want bans or restrictions on this technology.

Last year 120 countries at the UN weapons convention couldn't agree to limit or ban the development of lethal autonomous weapons. They pledged, instead, to continue and “intensify” discussions.

The first known use of an autonomous killing machine was in the Libyan civil war, early in 2020 according to the UN. In 2021 the New York Times reported that Israeli agents used an AI assisted 7.62mm sniper machine gun with face recognition capability to assassinate an Iranian nuclear scientist. Allegedly the face recognition technology was able to decide that his wife who was in the car with him shouldn't be killed.

AI is deciding who lives or dies.

Is that really acceptable?

Real killer drones

Ok, what about the real killer drones? The one on the left is a Reaper drone loaded with a couple of Hellfire missiles, potentially primed to get dropped on the head of somebody on the US kill list, based on the location of their mobile phone. The former head of the NSA and CIA, General Michael Hayden, said in 2014 “We kill people based on metadata.”

Just pause and think about that… we KILL people based on metadata.

The little drone that can be launched like a paper plane is just a reconnaissance & surveillance device. Not yet as small as the currently fictional slaughterbots but heading that way.

It looks like governments are going to be the only entities big enough and tough enough to control these things... at least in killer machines... autonomous killer machines. In democracies we elect people to run our governments, eh... well... maybe not in the UK in recent times. So we’re going to have to get back to those politicians again. Maybe the most internationally respected types should be the ones to look to? How about former US president, Barack Obama?

Weeeellll Obama didn’t have a great track record when it came to killer drones. Just by the numbers alone, there were ten times more air strikes during Obama’s presidency than under his predecessor, George W. Bush. Obama presided over more drone strikes in his first year than Bush in his whole presidency.

According to Gregoire Chamayou in his deeply affecting book on the US drone program, Obama had meetings, every Tuesday, where kill lists of people considered enemies of the US were approved by the president for extra-judicial despatch from this earthly domain. These meetings were dubbed “terror Tuesday” meetings by the media. The Obama administration had largely suspended Bush and Cheney’s CIA rendition for torture programme and replaced it with drone killings. Like the Bush Cheney regime’s kidnap, torture and bombing which became known as extraordinary rendition, enhanced interrogation, shock & awe, Obama’s kill list got its own translation from the spin doctors – they called it the disposition matrix.

So maybe Obama and his ilk are not our answer to the computing machine control problem either.

Drone Theory

Gregoire Chamayou’s book requires a slide all of its own. It's one of the most profound books I have ever encountered; it should be compulsory reading for all academics and university students whatever their discipline.

A word from my favourite philosopher...

I’m going to conclude by noting that technology is a sociotechnical phenomenon – it is intensely human and dependent on human choices. We didn’t have to build a surveillance society and additionally wire it with billions of insecure physical devices and systems, the operation or compromising of which can be hazardous to the point of life threatening. That was the emergent and cumulative effect of millions of human design, political, developmental, economic, environmental & operational choices.

Much like the climate crisis to which it is, additionally, a significant contributory factor, we have a very real and growing digital environmental crisis which humanity is utterly failing to address. Politicians, ‘sentient’ AI, governments, the market, the big five tech giants or their commercial peers, rivals and enablers, billionaire techbros, police, the military or ourselves will not control the machines, on our current trajectory.

Serious new directions are called for.

Tune in next time for:

How we should control the computerised machines: a wake-up call to arms (and not the killing machine type)

Thank you for your attention, indulgence and stamina on a cooling Friday evening. It is a genuine privilege and a pleasure to be amongst a gathering of tutors again. You good folks are the heart and soul of this university.